AidGenSE RAG Service Deployment Guide

Introduction

RAG (Retrieval-Augmented Generation) is a technical architecture that combines information retrieval and text generation, widely used in generative AI scenarios such as question-answering systems, enterprise search, and document assistants. Its basic idea is to retrieve relevant information from external knowledge sources before generating answers, thereby improving the accuracy and controllability of responses.

AidGenSE has built-in RAG-related components, making it convenient for developers to quickly deploy their own RAG applications.
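The retrieve-then-generate flow described above can be sketched in a few lines. This is a minimal illustration, not AidGenSE's implementation: it assumes a toy in-memory document list and ranks passages by simple word overlap, which stands in for the embedding-based similarity a real knowledge base would use. The names `DOCUMENTS`, `retrieve`, and `build_prompt` are hypothetical.

```python
import re

# Toy in-memory "knowledge base"; these passages are illustrative only.
DOCUMENTS = [
    "To unlock the car without a key, use the mobile app.",
    "Tire pressure should be checked once a month.",
    "The touchscreen can be restarted by holding both scroll wheels.",
]


def tokenize(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Rank documents by the number of words they share with the question."""
    q_tokens = tokenize(question)
    scored = [(len(q_tokens & tokenize(doc)), doc) for doc in documents]
    # Keep only documents with at least one overlapping word.
    hits = [pair for pair in scored if pair[0] > 0]
    hits.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in hits[:top_k]]


def build_prompt(question, passages):
    """Place the retrieved passages ahead of the question in the final prompt."""
    if not passages:
        return question
    context = "\n".join(passages)
    return f"Context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    question = "How often should I check tire pressure?"
    print(build_prompt(question, retrieve(question, DOCUMENTS)))
```

The prompt returned by `build_prompt` is what would be sent to the language model; when nothing is retrieved, the question is passed through unchanged.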

Support Status

AidGenSE currently includes two built-in demo knowledge bases:

| Knowledge Base Name | Embedding Model Used |
|---|---|
| Tesla User Manual | BAAI/bge-large-zh-v1.5 |
| Car Maintenance Manual | BAAI/bge-large-zh-v1.5 |

Quick Start

Installation

To install the AidGenSE components, refer to AidGenSE Installation.

Install RAG components

# Install RAG service

sudo aid-pkg update

sudo aidllm install rag

View Available Vector Knowledge Bases

aidllm remote-list rag

Example output:

Name        EmbeddingModel                CreateTime
----        ----------------------------  --------------------
tesla       BAAI/bge-large-zh-v1.5        2025-04-14 09:59:43
mechanical  BAAI/bge-large-zh-v1.5        2025-04-14 09:59:43

Download Specified Knowledge Base

# Download specified knowledge base

aidllm pull rag [rag_name] # e.g., tesla

# View downloaded knowledge bases

aidllm list rag

# Delete downloaded knowledge base

sudo aidllm rm rag [rag_name] # e.g., tesla

Start Service

# Start with specified model

aidllm start api -m <model_name>

# Start specified knowledge base

aidllm start rag -n <rag_name>

Chat Testing

Using Web UI for chat testing

# Install UI frontend service

sudo aidllm install ui

# Start UI service

aidllm start ui

# Check UI service status

aidllm status ui

# Stop UI service

aidllm stop ui

💡Note

After the UI service starts, open http://ip:51104 in a browser, where ip is the IP address of the device running the service.
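Before opening the browser, it can be useful to confirm that the UI port is accepting connections. The sketch below is an optional check and not part of AidGenSE: it assumes only that the Web UI listens on TCP port 51104 as stated above. The helper name `is_port_open` and the `127.0.0.1` host are placeholders.

```python
import socket


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # "127.0.0.1" is a placeholder; use the device's IP when checking remotely.
    host, port = "127.0.0.1", 51104
    state = "reachable" if is_port_open(host, port) else "not reachable"
    print(f"Web UI port {port} on {host} is {state}")
```

A successful TCP connection shows only that something is listening on the port; `aidllm status ui` remains the way to check the service itself.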

Using Python for chat testing

import json

import requests

# Prompt template: {response} receives the retrieved context, {question} the user's question
RAG_PROMPT = '''
You are an intelligent assistant. Your goal is to provide accurate information and help questioners solve problems as much as possible. You should remain friendly but not overly verbose. Please answer relevant queries based on the provided context information without considering existing knowledge. If no context information is provided, please answer relevant queries based on your knowledge.
Context content:
{response}
Extract and answer questions related to "{question}".
'''

def rag_query(question):
    """Query the RAG service and return a prompt augmented with the retrieved context."""
    url = "http://127.0.0.1:18111/query"
    # Set RAG knowledge base
    rag_name = "<rag_name>"
    headers = {
        "Content-Type": "application/json"
    }
    payload = {
        "text": question,
        "collection_name": rag_name,
        "top_k": 1,
        "score_threshold": 0.1
    }
    response = requests.post(url, headers=headers, json=payload)
    if response.status_code == 200:
        result = response.json()
        answer = ""
        # Use the top-ranked passage as context when the search returns a hit
        if len(result['data']) > 0:
            answer = result['data'][0]['text']
        return RAG_PROMPT.format(response=answer, question=question)
    # Fall back to the raw question if the RAG service did not respond successfully
    return question

def stream_chat_completion(messages, model="<model_name>"):  # Set large model
    """Send the messages to the chat completions API and print the streamed reply."""
    url = "http://127.0.0.1:8888/v1/chat/completions"
    headers = {
        "Content-Type": "application/json"
    }
    payload = {
        "model": model,
        "messages": messages,
        "stream": True  # Enable streaming
    }
    # Make request with stream=True
    response = requests.post(url, headers=headers, json=payload, stream=True)
    response.raise_for_status()
    # Read line by line and parse SSE format
    for line in response.iter_lines():
        if not line:
            continue
        line_data = line.decode('utf-8')
        # Each SSE line starts with "data: " prefix
        if line_data.startswith("data: "):
            data = line_data[len("data: "):]
            # End marker
            if data.strip() == "[DONE]":
                break
            try:
                chunk = json.loads(data)
            except json.JSONDecodeError:
                # Print and skip when parsing fails
                print("Unable to parse JSON: ", data)
                continue
            # Extract model output token
            content = chunk["choices"][0]["delta"].get("content")
            if content:
                print(content, end="", flush=True)

if __name__ == "__main__":
    # Set question
    user_input = "<question>"
    # Build the augmented prompt (named `prompt` so the rag_query function is not shadowed)
    prompt = rag_query(user_input)
    print("user input:", user_input)
    print("rag query:", prompt)
    messages = [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": prompt}
    ]
    print("Assistant:", end=" ")
    stream_chat_completion(messages)
    print()  # New line

Example: Using Qwen2.5-3B-Instruct with Vehicle Manual Knowledge Base on Qualcomm 8550

- Install AidGenSE and RAG components

# Install aidgense

sudo aid-pkg -i aidgense

# Install RAG service

sudo aidllm install rag

- Download the Qwen2.5-3B-Instruct model and the vehicle manual knowledge base

# Download model

aidllm pull api aplux/qwen2.5-3B-Instruct-8550

# Download vehicle manual knowledge base

aidllm pull rag tesla

- Start services

# Start with specified model

aidllm start api -m qwen2.5-3B-Instruct-8550

# Start specified knowledge base

aidllm start rag -n tesla

- Use Web UI for chat testing

# Install UI frontend service

sudo aidllm install ui

# Start UI service

aidllm start ui

Access http://ip:51104

- Use Python for chat testing

import json

import requests

# Prompt template: {response} receives the retrieved context, {question} the user's question
RAG_PROMPT = '''
You are an intelligent assistant. Your goal is to provide accurate information and help questioners solve problems as much as possible. You should remain friendly but not overly verbose. Please answer relevant queries based on the provided context information without considering existing knowledge. If no context information is provided, please answer relevant queries based on your knowledge.
Context content:
{response}
Extract and answer questions related to "{question}".
'''

def rag_query(question):
    """Query the RAG service and return a prompt augmented with the retrieved context."""
    url = "http://127.0.0.1:18111/query"
    # Set RAG knowledge base
    rag_name = "tesla"
    headers = {
        "Content-Type": "application/json"
    }
    payload = {
        "text": question,
        "collection_name": rag_name,
        "top_k": 1,
        "score_threshold": 0.1
    }
    response = requests.post(url, headers=headers, json=payload)
    if response.status_code == 200:
        result = response.json()
        answer = ""
        # Use the top-ranked passage as context when the search returns a hit
        if len(result['data']) > 0:
            answer = result['data'][0]['text']
        return RAG_PROMPT.format(response=answer, question=question)
    # Fall back to the raw question if the RAG service did not respond successfully
    return question

def stream_chat_completion(messages, model="qwen2.5-3B-Instruct-8550"):  # Set large model
    """Send the messages to the chat completions API and print the streamed reply."""
    url = "http://127.0.0.1:8888/v1/chat/completions"
    headers = {
        "Content-Type": "application/json"
    }
    payload = {
        "model": model,
        "messages": messages,
        "stream": True  # Enable streaming
    }
    # Make request with stream=True
    response = requests.post(url, headers=headers, json=payload, stream=True)
    response.raise_for_status()
    # Read line by line and parse SSE format
    for line in response.iter_lines():
        if not line:
            continue
        line_data = line.decode('utf-8')
        # Each SSE line starts with "data: " prefix
        if line_data.startswith("data: "):
            data = line_data[len("data: "):]
            # End marker
            if data.strip() == "[DONE]":
                break
            try:
                chunk = json.loads(data)
            except json.JSONDecodeError:
                # Print and skip when parsing fails
                print("Unable to parse JSON: ", data)
                continue
            # Extract model output token
            content = chunk["choices"][0]["delta"].get("content")
            if content:
                print(content, end="", flush=True)

if __name__ == "__main__":
    # Set question
    user_input = "What should I do if I lost my car key?"
    # Build the augmented prompt (named `prompt` so the rag_query function is not shadowed)
    prompt = rag_query(user_input)
    print("user input:", user_input)
    print("rag query:", prompt)
    messages = [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": prompt}
    ]
    print("Assistant:", end=" ")
    stream_chat_completion(messages)
    print()  # New line

Creating Custom RAG Knowledge Base

I. Preparation

Register Account

Visit the AidLux official website to register an Aidlux account and log in.

Log in to aidllm-cms

Open your browser, visit https://aidllm.aidlux.com, and log in with your Aidlux account.

II. Create RAG Knowledge Base

Enter Knowledge Base Management Interface

After logging in, click the "Knowledge Base" menu on the left to open the management page, then click "New".

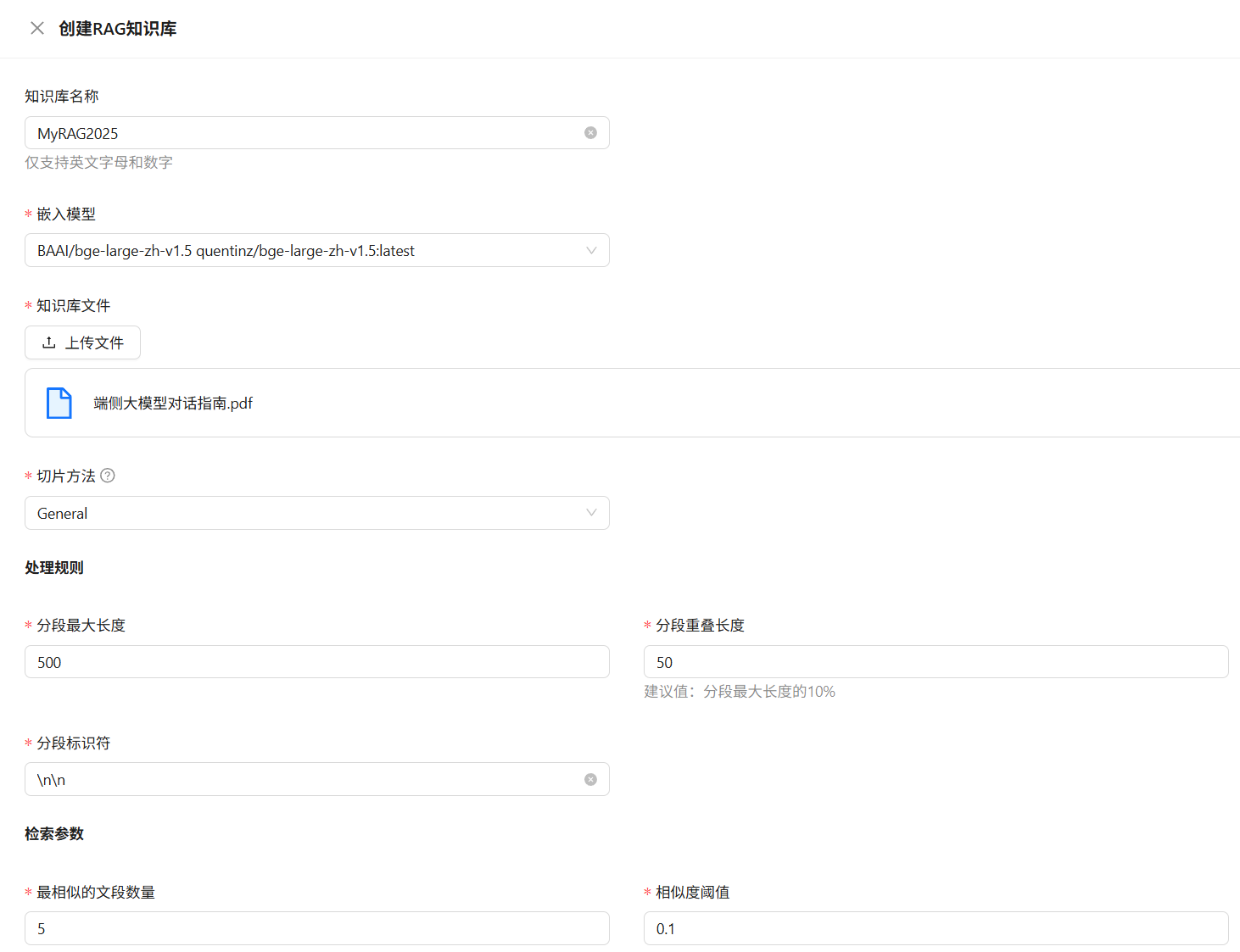

Fill in Knowledge Base Information

- Name: Only supports English letters and numbers (e.g., MyRAG2025).

- Embedding Model: Select the currently loaded embedding model.

- Chunking Method: Choose General or Q&A, as described below:

| Chunking Method | Supported Formats | Description |
|---|---|---|
| General | Text (.txt / .pdf) | Split continuous text by "segment identifiers", then merge by token count not exceeding "max length" into chunks. |
| Q&A | .xlsx / .csv / .txt | For Q&A format: Excel two columns (no header: question/answer); CSV/TXT use Tab separator, UTF-8 encoding. |
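The General chunking method described above (split on segment identifiers, then merge up to a maximum length) can be sketched as follows. This is only an illustration of the idea under stated assumptions, not the CMS's actual algorithm: tokens are approximated by whitespace-separated words, the segment identifier defaults to a newline, and `chunk_text`, `separators`, and `max_tokens` are hypothetical names.

```python
import re


def count_tokens(text):
    """Approximate the token count by whitespace-separated words (an assumption)."""
    return len(text.split())


def chunk_text(text, separators=("\n",), max_tokens=20):
    """Split text on the separators, then greedily merge segments into chunks."""
    pattern = "|".join(re.escape(sep) for sep in separators)
    segments = [seg.strip() for seg in re.split(pattern, text) if seg.strip()]

    chunks, current, current_len = [], [], 0
    for seg in segments:
        seg_len = count_tokens(seg)
        # Close the current chunk when adding this segment would exceed the limit.
        if current and current_len + seg_len > max_tokens:
            chunks.append(" ".join(current))
            current, current_len = [], 0
        current.append(seg)
        current_len += seg_len
    if current:
        chunks.append(" ".join(current))
    return chunks


if __name__ == "__main__":
    sample = "a b c\nd e\nf g h i\nj"
    for chunk in chunk_text(sample, max_tokens=5):
        print(repr(chunk))
```

In this sketch a single segment longer than `max_tokens` is kept as one oversized chunk rather than being split further; a production chunker would likely handle that case differently.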

Notes

- Created knowledge bases are private by default and only visible to yourself.

- When using command-line tools, you can only view public knowledge bases and your own private knowledge bases.
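For the Q&A chunking method, the TXT/CSV input is a headerless, tab-separated, UTF-8 file with one question/answer pair per row. The sketch below shows one way to produce and sanity-check such a file with Python's `csv` module. The function names and sample pairs are hypothetical, and whether the CMS accepts CSV-style quoting for fields containing tabs or newlines is not confirmed by this guide, so keep each field on a single line.

```python
import csv
import os
import tempfile


def write_qa_file(pairs, path):
    """Write (question, answer) pairs as tab-separated UTF-8 text without a header."""
    with open(path, "w", encoding="utf-8", newline="") as f:
        writer = csv.writer(f, delimiter="\t", lineterminator="\n")
        for question, answer in pairs:
            writer.writerow([question, answer])


def read_qa_file(path):
    """Read the file back and confirm that every row has exactly two columns."""
    with open(path, encoding="utf-8", newline="") as f:
        rows = list(csv.reader(f, delimiter="\t"))
    for number, row in enumerate(rows, start=1):
        if len(row) != 2:
            raise ValueError(f"row {number} has {len(row)} columns, expected 2")
    return [tuple(row) for row in rows]


if __name__ == "__main__":
    # Illustrative pairs only; replace them with your own content.
    pairs = [
        ("What is RAG?", "Retrieval-Augmented Generation."),
        ("Which port does the Web UI use?", "51104"),
    ]
    path = os.path.join(tempfile.mkdtemp(), "qa.txt")
    write_qa_file(pairs, path)
    print(read_qa_file(path))
```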

III. Knowledge Base Service Startup

Log in to Remote CMS

aidllm login

Use your registered Aidlux account to log in. Logging in is required only for commands that operate on remote knowledge bases.

View Knowledge Base List

aidllm remote-list rag

Example output:

Name        EmbeddingModel          CreateTime
aidluxdocs  BAAI/bge-large-zh-v1.5  2025-07-09 14:56:31
MyRAG2025   BAAI/bge-large-zh-v1.5  2025-07-21 16:08:14

Pull Knowledge Base

aidllm pull rag <knowledge_base_name>

# For example:
aidllm pull rag MyRAG2025

Start RAG Service

aidllm start rag -n <rag_name>

Example output:

Use rag: MyRAG2025
Use model: bge-large-zh-v1.5
Rag server starting...
Rag server starting...
Rag server starting...
Rag server starting...
Rag server starting...
Rag server starting...
Rag server start successfully.

Once the server has started successfully, the local knowledge retrieval service is running.
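Once the service is running, a quick retrieval test against the custom knowledge base might look like the sketch below. It assumes the custom knowledge base is served on the same local endpoint (`http://127.0.0.1:18111/query`) and uses the same request and response fields as the chat-testing script earlier in this guide. It uses the standard library's `urllib` rather than `requests` to avoid a third-party dependency. The helper names and the sample question are hypothetical.

```python
import json
import urllib.request

# Endpoint taken from the chat-testing script; assumed to serve custom knowledge bases too.
RAG_URL = "http://127.0.0.1:18111/query"


def build_query_payload(question, rag_name, top_k=1, score_threshold=0.1):
    """Build the JSON body using the same fields as the chat-testing script."""
    return {
        "text": question,
        "collection_name": rag_name,
        "top_k": top_k,
        "score_threshold": score_threshold,
    }


def extract_passages(result):
    """Collect the 'text' of each hit; tolerate a missing or empty 'data' list."""
    return [hit["text"] for hit in (result.get("data") or []) if "text" in hit]


def query_knowledge_base(question, rag_name, timeout=10):
    """POST the query to the local RAG service and return the retrieved passages."""
    body = json.dumps(build_query_payload(question, rag_name)).encode("utf-8")
    request = urllib.request.Request(
        RAG_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_passages(json.loads(response.read().decode("utf-8")))


if __name__ == "__main__":
    try:
        # "MyRAG2025" matches the example name above; the question is a placeholder.
        print(query_knowledge_base("What is covered in this manual?", "MyRAG2025"))
    except OSError as exc:
        print("RAG service not reachable:", exc)
```

An empty list means that either no passage scored above `score_threshold` or the response carried no `data` entries.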