AI Model Optimizer User Guide

Introduction

AI Model Optimizer (AIMO) is a web-based, interactive platform for deploying and optimizing AI models. It helps users quickly migrate, deploy, and run machine learning models on edge chipsets. AIMO can convert models from other mainstream frameworks into formats such as TFLite, ONNX, and DLC.

AIMO provides two deployment methods:

- SaaS-based cloud service (AIMO Online)

- Private Docker deployment (Contact Sales)

AIMO integrates multiple model conversion tools to simplify the conversion process, so users can convert models with zero code and at low cost. For Qualcomm platforms, AIMO also includes additional operator libraries that improve the success rate of model conversion. Models that contain UDOs (User-Defined Operations) must be run with the AidLite SDK inference engine (see AidLite SDK Developer Docs for details).

How to Use

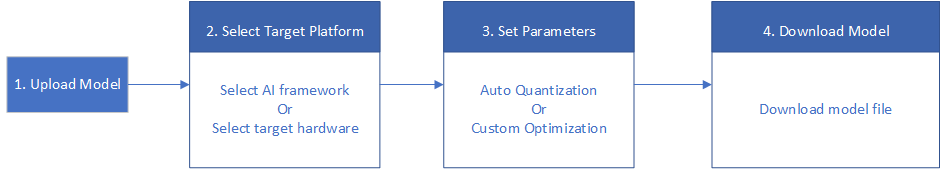

The AIMO workflow is shown in the diagram below:

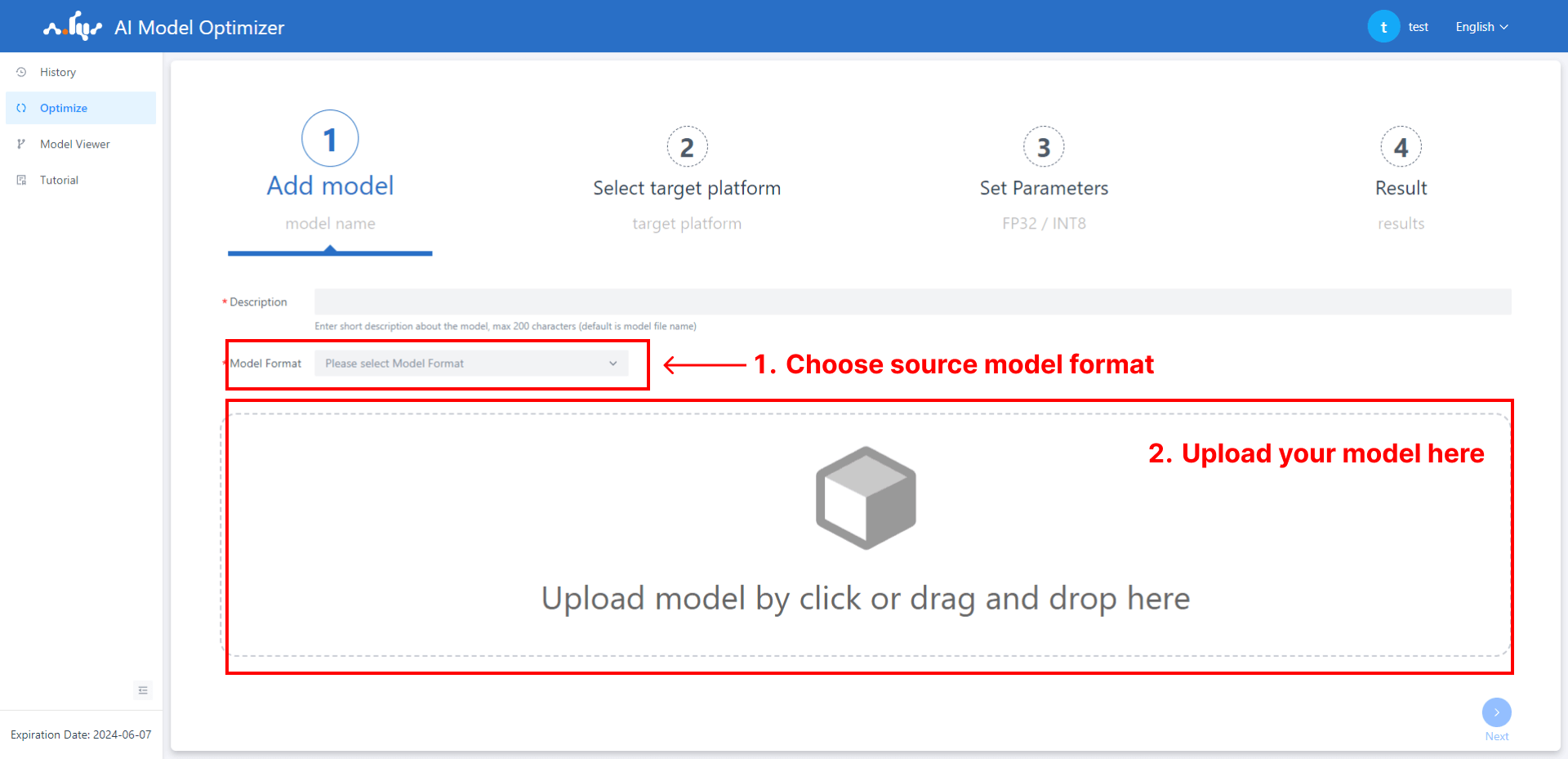

Step 1: Upload Model

First, select the source model format, then drag and drop or click to upload the model to AIMO.

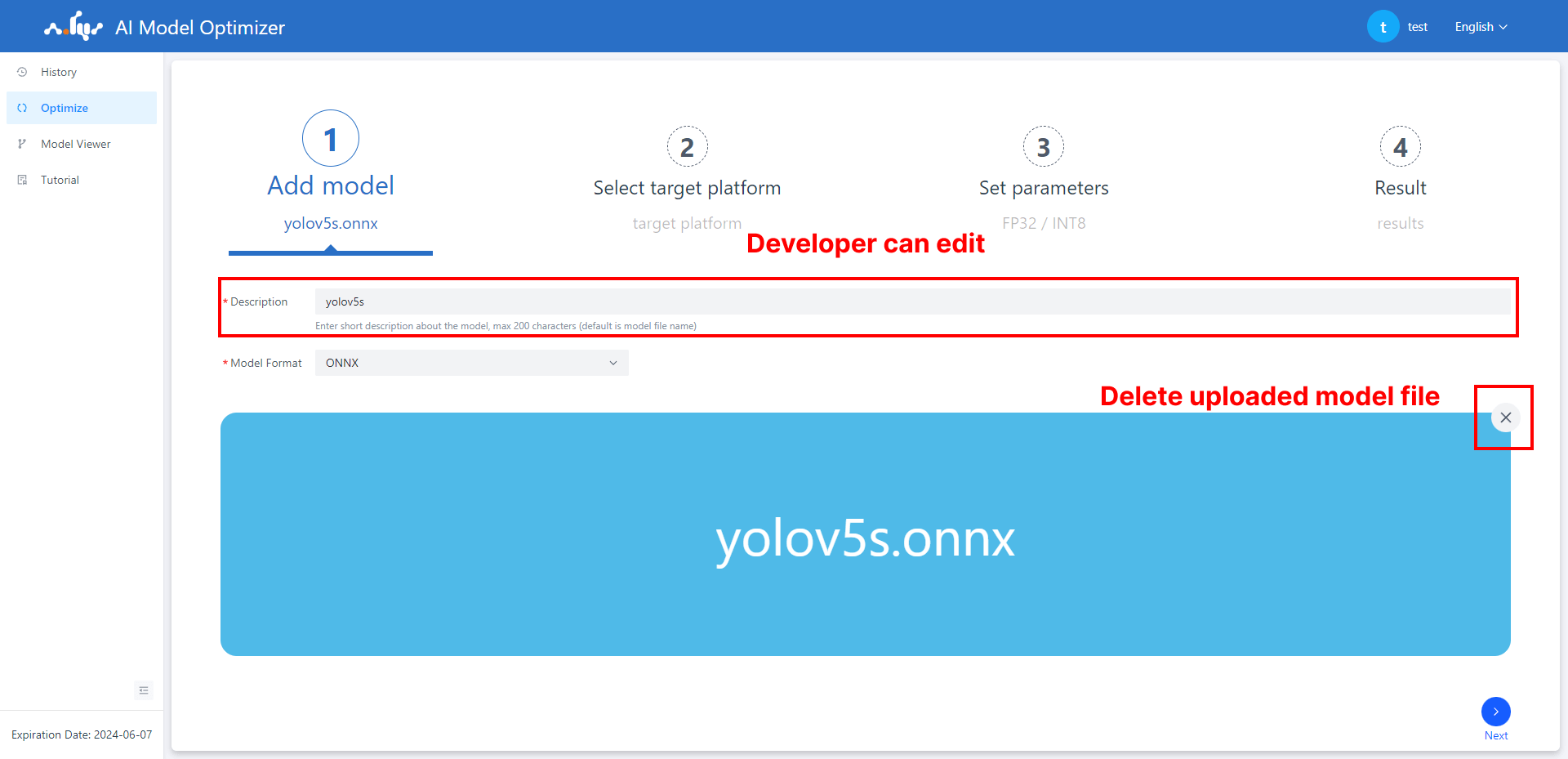

After a successful upload, the page appears as shown below. The Description field is auto-filled from the model filename, and developers can edit it manually.

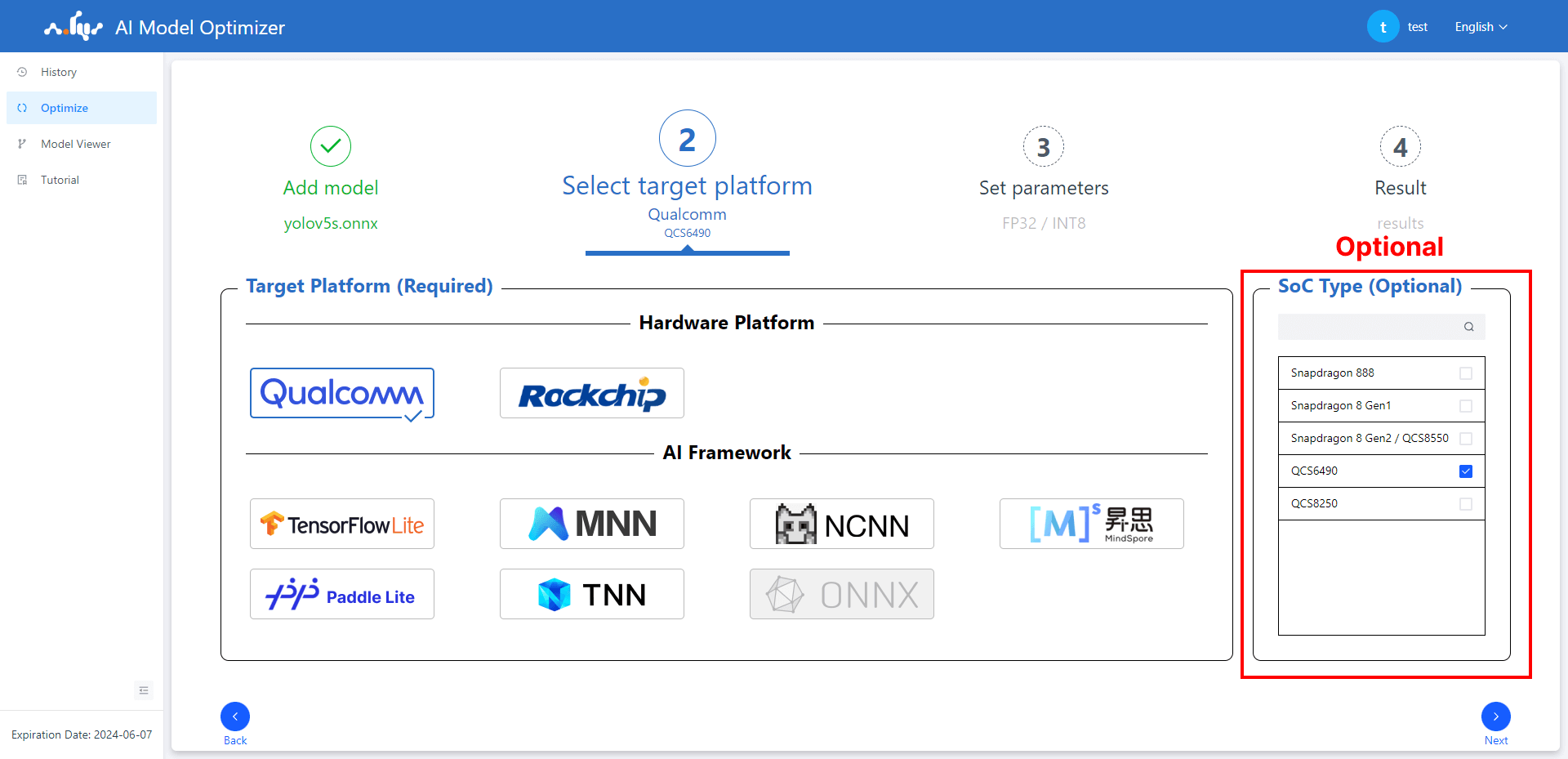

Step 2: Select Target Platform

In this step, select the target platform. When a developer selects an option under Hardware Platform, the Chip Model section on the right lists the supported chip models.

💡Note

Selecting a Chip Model is optional. If a specific chip model is selected, AIMO can apply more targeted hardware-specific optimizations.

Step 3: Set Parameters

Depending on the source model format, the optimization interface may include the following options:

- Network Simplifying: Simplifies the network structure of the source model and outputs a simplified ONNX model. This option appears only when the source model is ONNX.

- Auto Cut Off: Automatically truncates the model at cut-off points determined by the network type. This applies to models with fixed truncation patterns.

- Auto Quantization: Automatically quantizes the model. Currently available only for some source model formats.

- Customize Mode: For advanced custom optimization settings.

💡Note

With Auto Quantization, AIMO quantizes the model automatically according to its built-in quantization strategy. This is the method AIMO recommends, and it is well suited to beginners.
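This guide does not describe AIMO's quantization strategy, so the sketch below is only a generic illustration of what INT8 quantization does to a tensor, not AIMO's algorithm. It assumes per-tensor asymmetric (affine) quantization, one common scheme, where each float value x maps to q = round(x / scale) + zero_point, clamped to the signed INT8 range [-128, 127]. The function names `quantize_int8` and `dequantize_int8` are hypothetical. Converting back to floats shows the small rounding error that quantization introduces.

```python
from typing import List, Tuple

# Signed INT8 range. Assumption: per-tensor asymmetric (affine) quantization,
# a generic scheme used for illustration only, not AIMO's own strategy.
QMIN, QMAX = -128, 127


def quantize_int8(values: List[float]) -> Tuple[List[int], float, int]:
    """Map floats to INT8 via q = round(x / scale) + zero_point, clamped to [QMIN, QMAX]."""
    # Extend the observed range to include 0.0 so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    # Size of one quantization step; fall back to 1.0 for an all-zero tensor.
    scale = (hi - lo) / (QMAX - QMIN) or 1.0
    # Integer offset chosen so that the float value lo maps to QMIN.
    zero_point = int(round(QMIN - lo / scale))
    q = [max(QMIN, min(QMAX, int(round(v / scale)) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_int8(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Approximate the original floats as (q - zero_point) * scale."""
    return [(qi - zero_point) * scale for qi in q]


if __name__ == "__main__":
    weights = [-1.0, 0.0, 0.5, 2.0]  # toy tensor, not real model data
    q, scale, zp = quantize_int8(weights)
    restored = dequantize_int8(q, scale, zp)
    for w, qi, r in zip(weights, q, restored):
        print(f"{w:+.4f} -> {qi:4d} -> {r:+.4f} (error {abs(w - r):.4f})")
```

For values inside the calibrated range, the round-trip error is on the order of half a quantization step (scale / 2). Other schemes, such as symmetric or per-channel quantization, balance range coverage and precision differently.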

Step 4: Download Model

Once model optimization is complete, the user can download the optimized model.

Optimization and conversion can take anywhere from a few seconds to several days, depending on the chosen quantization and optimization settings.

Conversion Failed

If the conversion fails, the Process Log shows detailed error messages that developers can use to identify the cause of the failure. The FAQ can also help diagnose the issue.

Example

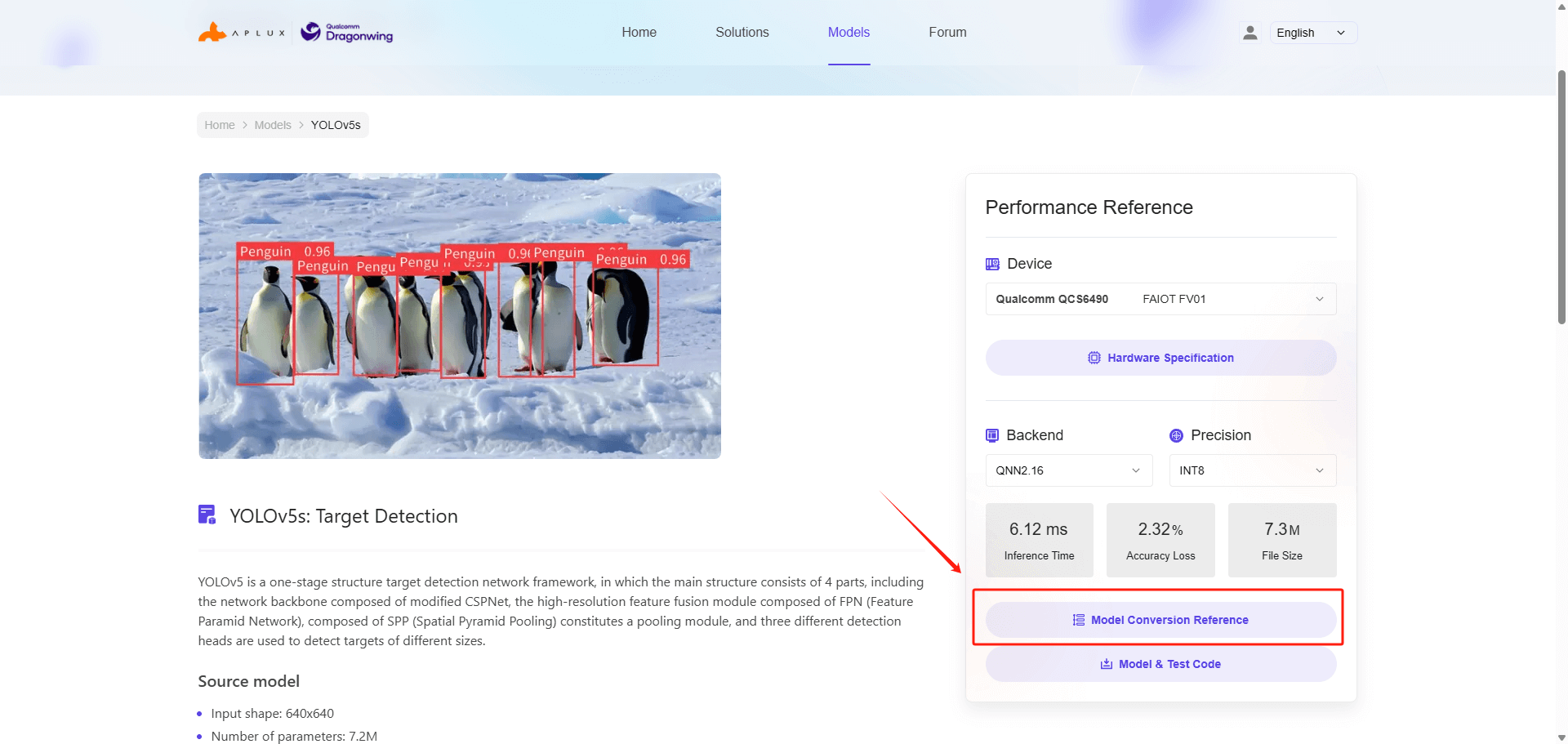

Model Farm provides a large collection of models converted with AIMO for Qualcomm platforms. Developers can view the AIMO conversion steps for each model on its details page for reference.

Taking YOLOv5s INT8 as an example:

1. Visit Model Farm.

2. Log in with a developer account.

3. Search for YOLOv5s.

4. Open the model details page and click the Model Conversion Reference button under the performance section on the right.